CHAPTER: 3

Color Transformation / Color Space Conversion

The only good way to ensure accurate reproduction of a scene captured in Color Space A and rendered in Color Space B is to be aware of the difference between the capture and delivery spaces and to mathematically transform from one color space to the other.

When working on visual effects, we need to keep a few things in mind when converting color spaces:

- Linearize all textures and footage (except data textures such as normal maps, specular maps, etc.)

- Work in Linear Space inside the software (Scene-Linear)

- Set a proper gamut

Linear Workflow and Gamma Correction

Let’s start with the first point: why do we need to linearize our textures and footage?

Video footage is typically encoded with a non-linear brightness adjustment. This can be a gamma curve, as in Rec.709 footage, or a log curve. From the previous chapter, we know why this is important.
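To make “non-linear encoding” concrete, here is a minimal Python sketch of the Rec.709 camera encoding curve (the OETF from ITU-R BT.709) and its inverse. The function names are our own; the inverse function is, in spirit, what “linearizing” Rec.709 footage means.

```python
def rec709_oetf(linear: float) -> float:
    """Rec.709 camera encoding: scene-linear light -> non-linear signal (0..1)."""
    if linear < 0.018:
        return 4.5 * linear  # short linear segment near black
    return 1.099 * linear ** 0.45 - 0.099  # power segment for the rest


def rec709_inverse_oetf(signal: float) -> float:
    """Undo the Rec.709 encoding: non-linear signal -> scene-linear light."""
    if signal < 4.5 * 0.018:  # 0.081, the encoded value at the breakpoint
        return signal / 4.5
    return ((signal + 0.099) / 1.099) ** (1 / 0.45)


for value in (0.01, 0.18, 0.5, 1.0):
    encoded = rec709_oetf(value)
    decoded = rec709_inverse_oetf(encoded)
    print(f"linear {value:.3f} -> encoded {encoded:.3f} -> decoded {decoded:.3f}")
```

Note how 18% linear gray is stored at roughly 0.41: the encoding spends more code values on the darker tones, which is exactly why the values must be decoded before doing light math on them.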

But in Nuke or Maya, we work in linear, because scene-linear workflows are based on real-world luminance, and calculations performed on real-world luminance behave the way light actually does. Now you might be wondering: what is real-world luminance, and how is it different from the luminance we see?

Let’s understand this concept with the help of the 18% Gray theory.

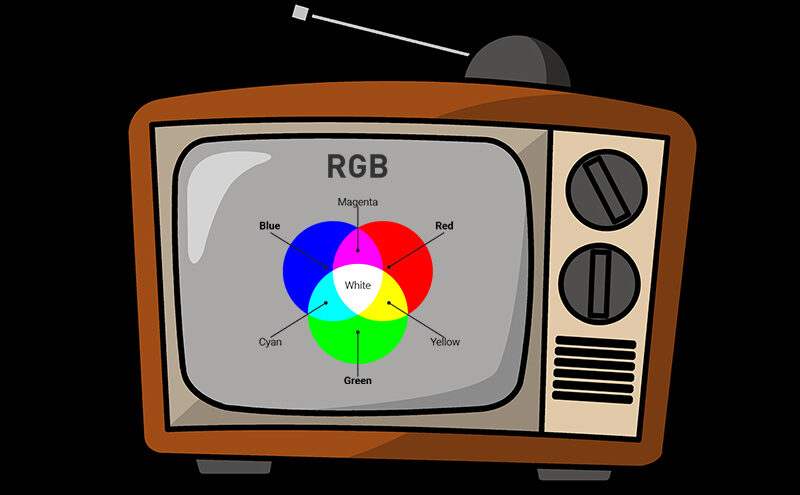

What we perceive as middle gray (50% gray) actually corresponds to a value of about 18% in the real world, where light behaves linearly. We see it as 50% gray because our eyes do not perceive light linearly. Human vision has a “gamma” of sorts: a boosting curve that expands our perception of dark tones and compresses highlights.

In other words, an 18% gray value maps to roughly 50% gray in our perception. If we performed lighting calculations on this mapped (50%) value instead of the real-world value, the results would be wrong.

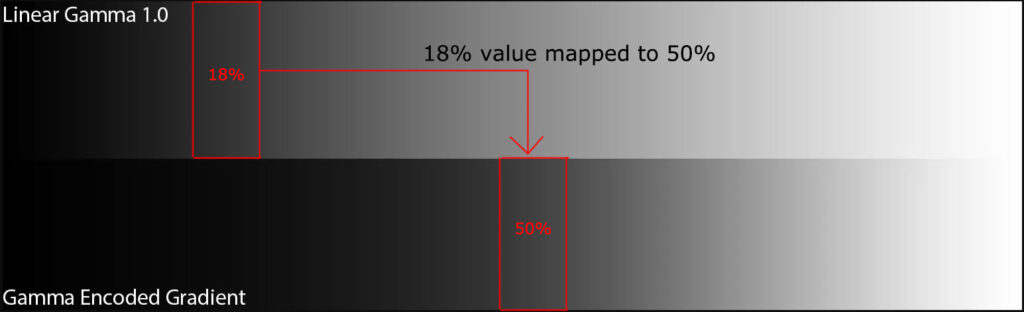
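A quick numeric check of the 18% gray idea: encoding scene-linear 0.18 with the sRGB curve (IEC 61966-2-1), or with a plain 2.2 gamma, lands close to the middle of the encoded range. This is a minimal sketch; the function names are our own.

```python
def srgb_encode(linear: float) -> float:
    """sRGB transfer function: linear light -> encoded value (0..1)."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def gamma22_encode(linear: float) -> float:
    """Pure 2.2 gamma-up, the simple approximation of the sRGB curve."""
    return linear ** (1 / 2.2)


middle_gray = 0.18  # scene-linear 18% gray
print(f"sRGB-encoded 18% gray:      {srgb_encode(middle_gray):.3f}")
print(f"gamma-2.2-encoded 18% gray: {gamma22_encode(middle_gray):.3f}")
```

Both come out at about 0.46, so “18% maps to 50%” is a rule of thumb rather than an exact identity, but it captures how strongly the encoding lifts the darker values.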

Let’s import gamma-encoded footage into a DCC. In this case, I am importing it into Nuke without linearizing it and increasing the exposure of the scene by one stop (doubling the intensity). The gray area now becomes completely white: predictably, the 50% region has doubled to 100%.

But anyone with good DSLR experience knows that overexposing by one stop does not slam middle gray into pure white. So what is going wrong? Why is it not behaving like real-world luminance?

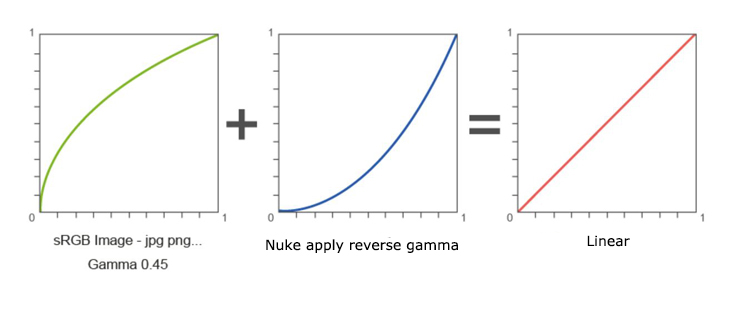

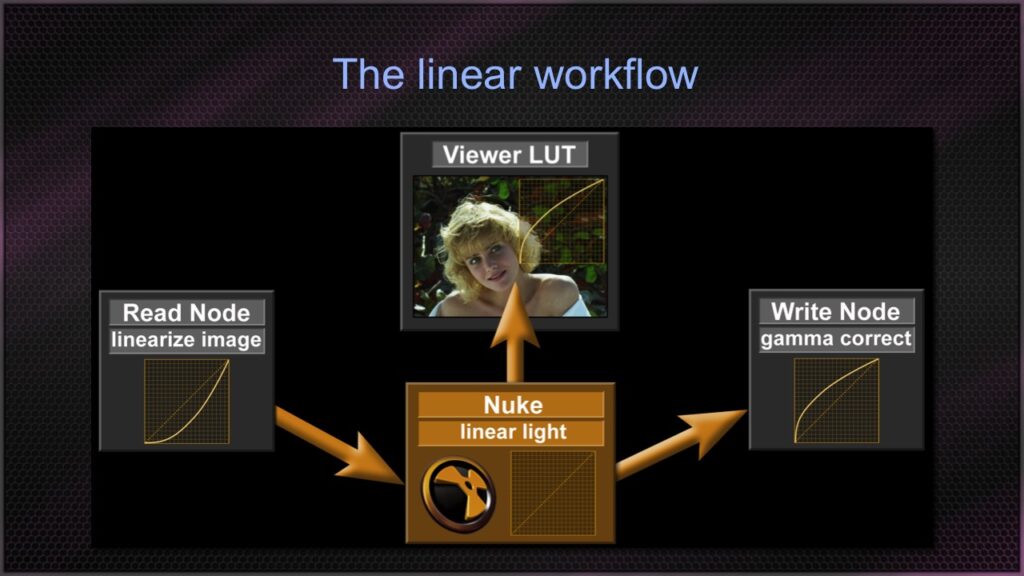

This is because Nuke works in linear space by default and expects footage to be linearized (by applying the inverse gamma) to process it correctly. This means the 50% gamma-encoded value should be mapped back to its real-world value of roughly 18%. Generally, Nuke does this automatically when we import footage (through the Read node’s colorspace setting), though sometimes we do it manually.

Now, if we increase the exposure of this linearized footage by one stop (doubling the intensity) and convert the values back to the sRGB color space for viewing, the gray area comes out as expected: brighter, but not clipped to white.
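The two experiments above can be reproduced numerically. This is a minimal sketch assuming the footage is sRGB-encoded (the function names are our own): doubling the encoded value directly clips the gray patch, while linearize, expose, re-encode gives a believable one-stop lift.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(x: float) -> float:
    """Encode linear light to sRGB for viewing (values above 1.0 clip to white)."""
    x = min(max(x, 0.0), 1.0)
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1 / 2.4) - 0.055


def exposure(value: float, stops: float) -> float:
    """Exposure in stops: each stop doubles (or halves) the value."""
    return value * 2.0 ** stops


gray = 0.5  # the gray patch as stored in the sRGB-encoded file

# Wrong: treat the encoded value as if it were linear, then clip for display.
naive = min(exposure(gray, 1), 1.0)

# Right: linearize, apply the exposure, re-encode for the viewer.
correct = linear_to_srgb(exposure(srgb_to_linear(gray), 1))

print(f"naive +1 stop:      {naive:.3f}")
print(f"linearized +1 stop: {correct:.3f}")
```

With the exact sRGB curve, the 50% code value decodes to about 0.21 rather than exactly 0.18, close to the 18% rule of thumb; after one stop it re-encodes to roughly 0.69 instead of slamming into 1.0.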

In the CG/VFX industry, almost everyone is too casual about using the word “linear” to mean both “scene-linear” and “display-linear”.

Let’s clarify the difference.

Scene Linear:

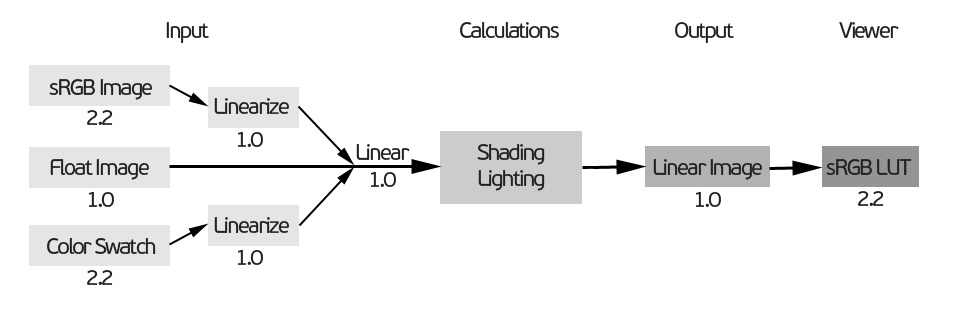

Scene-linear workflows are an approach to shading, lighting, rendering, and compositing that offers many advantages. Color management enables a linear workflow by properly converting colors for input, rendering, display, and output.

In this example, the input is display-referred (gamma-encoded) and gets linearized for the lighting calculation. Then we take the scene-linear output and apply tone mapping to view it properly.
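The three stages described above (linearize the input, compute in scene-linear, tone map for viewing) can be sketched in a few lines. This is a toy model under loud assumptions: “lighting” is reduced to a single multiply by a hypothetical light intensity, and the simple Reinhard curve `x / (1 + x)` stands in for a real view transform (production pipelines use OCIO or ACES output transforms, not this curve).

```python
def srgb_to_linear(v: float) -> float:
    """Input transform: decode an sRGB-encoded texture value to linear."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(x: float) -> float:
    """Encode a display-range (0..1) linear value with the sRGB curve."""
    x = min(max(x, 0.0), 1.0)
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1 / 2.4) - 0.055


def reinhard(x: float) -> float:
    """Simple Reinhard tone curve x / (1 + x): squeezes [0, inf) into [0, 1)."""
    return x / (1.0 + x)


albedo_srgb = 0.5  # texture value as painted (display-referred)
light = 4.0        # hypothetical light intensity in scene-linear units

albedo_linear = srgb_to_linear(albedo_srgb)   # 1. linearize the input
radiance = albedo_linear * light              # 2. "light" it in scene-linear (may exceed 1.0)
display = linear_to_srgb(reinhard(radiance))  # 3. tone map + encode for the monitor

print(f"scene-linear radiance: {radiance:.3f}")
print(f"display value:         {display:.3f}")
```

The point of the design is that step 2 is free to produce values far above 1.0; only the final view transform decides how they are squeezed into the monitor’s range.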

Display Linear:

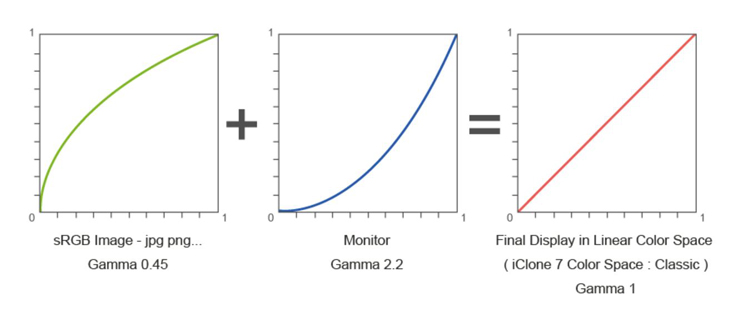

When gamma-encoded (gamma-up) images are shown on a monitor, the monitor applies the inverse gamma (gamma down) to display them properly. So what we see on our displays is actually linear light, or, better put, “display-linear” light, since the encoding and decoding of our images are supposed to cancel each other out.

The process that converts scene-linear values to display-linear values is commonly known as “tone mapping”, but it is also called a “view transform” or an “output transform”.

So when we say that our display has a gamma of 2.2 and that we save our display-referred images in sRGB, it does not mean we are applying gamma twice. The 2.2 gamma of our display is actually “gamma down” (the EOTF, or electro-optical transfer function), whereas a gamma of 2.2 in Nuke is “gamma up”.
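A minimal sketch of why encoding and display decoding cancel out, assuming an idealized display that is a pure 2.2 power curve (real sRGB displays follow a slightly different curve). The function names are our own.

```python
GAMMA = 2.2


def gamma_up(linear: float) -> float:
    """Encoding ("gamma up"): raise to 1/2.2, which brightens mid-tones."""
    return linear ** (1 / GAMMA)


def display_eotf(signal: float) -> float:
    """Idealized display EOTF ("gamma down"): raise to 2.2."""
    return signal ** GAMMA


for light in (0.05, 0.18, 0.5, 1.0):
    stored = gamma_up(light)
    emitted = display_eotf(stored)
    print(f"{light:.2f} -> stored {stored:.3f} -> emitted {emitted:.3f}")

# The real "double gamma" mistake: encoding twice before the display decodes once.
washed_out = display_eotf(gamma_up(gamma_up(0.18)))
print(f"18% gray encoded twice is emitted as {washed_out:.3f}")
```

One gamma up followed by the display’s gamma down returns the original light (display-linear); only an extra, second encoding produces the washed-out result.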

Maya Scene Linear Workflow:

Here is a basic workflow for color management with Arnold.

Nuke Scene Linear Workflow:

In the traditional workflow, the most commonly used color space in VFX is linear-sRGB. As we have seen, working in linear space is more mathematically correct. Let’s look at the Nuke process with an example. Say we have received log footage from an Arri Alexa. In Nuke, compositors convert the log footage to linear-sRGB (linearize the footage), and then they can combine elements from the CG and matte painting departments in the same linear-sRGB color space. This keeps all the math consistent.
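For a feel of what “convert log footage back to linear” does, here is a minimal Python sketch of ARRI’s LogC3 curve. The constants are assumed to be ARRI’s published LogC3 parameters for EI 800 (other EI settings use different values), so verify them against ARRI’s documentation before relying on them. This handles the transfer function only: the decoded values are still in ALEXA Wide Gamut primaries.

```python
import math

# Assumed ARRI LogC3 parameters for EI 800.
CUT = 0.010591
A = 5.555556
B = 0.052272
C = 0.247190
D = 0.385537
E = 5.367655
F = 0.092809


def logc3_to_linear(t: float) -> float:
    """Decode a LogC3 (EI 800) code value (0..1) to scene-linear."""
    if t > E * CUT + F:  # log segment
        return (10.0 ** ((t - D) / C) - B) / A
    return (t - F) / E   # linear toe segment


def linear_to_logc3(x: float) -> float:
    """Encode scene-linear to LogC3 (EI 800)."""
    if x > CUT:
        return C * math.log10(A * x + B) + D
    return E * x + F


log_gray = linear_to_logc3(0.18)
print(f"18% gray in LogC3: {log_gray:.3f}")
print(f"decoded back:      {logc3_to_linear(log_gray):.3f}")
```

Middle gray sits at roughly 0.39 in LogC3, much lower than in a display gamma encoding, which leaves plenty of code values for highlights above 1.0.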

There are also cases where we might need to convert between color spaces while working. Some useful color space conversions in Nuke are as follows:

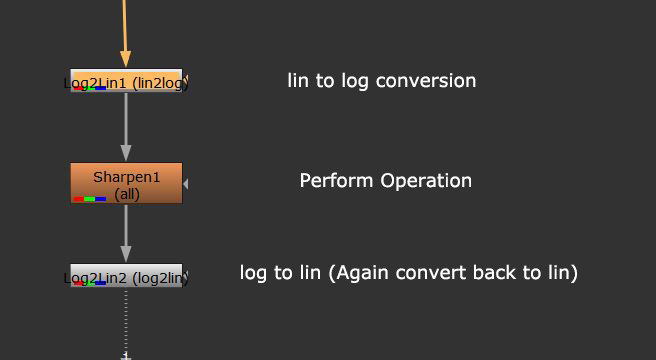

Working in Log Space in Nuke:

Now, let’s look at how this is relevant to your day-to-day comps. Generally, plates are shot in log, but they are linearized when they enter the VFX facility so that we, the humble artists, can work in a way that makes mathematical sense.

The obvious question, then, is: if plates are linearized because working in log space makes no mathematical sense, why would I ever want to convert an image back to log space?

Examples would include:

- To see more hair or edge detail over a bright background (like a sky or light source).

- To pull an accurate key for a plate shadow, so combining a CG shadow pass with it is less painful.

- The most common reason to convert your image to log space, however, is to prevent image-filtering artifacts (e.g. when reformatting, transforming, or sharpening your image).

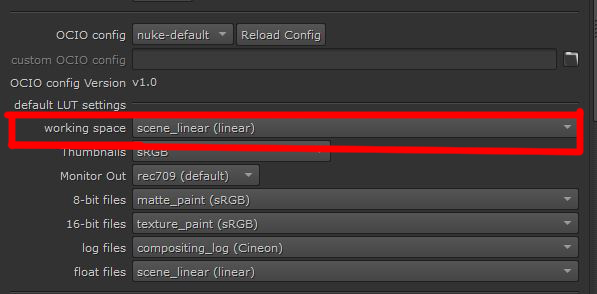
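A toy 1D illustration of the filtering point, under stated assumptions: a 3-tap kernel with small negative side lobes stands in for sharpening and sinc-like resampling filters, and a pure log2 curve stands in for a real log encoding (Cineon, LogC, and so on). Both are hypothetical simplifications, not what Nuke does internally.

```python
import math


def filter_3tap(values, weights=(-0.1, 1.2, -0.1)):
    """Convolve with a 3-tap kernel whose negative side lobes mimic sharpening
    and sinc-like resampling filters. Border pixels are clamped (repeated)."""
    n = len(values)
    out = []
    for i in range(n):
        left = values[max(i - 1, 0)]
        right = values[min(i + 1, n - 1)]
        out.append(weights[0] * left + weights[1] * values[i] + weights[2] * right)
    return out


def lin_to_log(values):
    # Hypothetical pure log2 curve, standing in for a real log encoding.
    return [math.log2(v) for v in values]


def log_to_lin(values):
    return [2.0 ** v for v in values]


# Dark hair/edge pixels next to a very bright sky or light source (scene-linear).
edge = [0.1, 0.1, 0.1, 50.0, 50.0, 50.0]

filtered_linear = filter_3tap(edge)
filtered_log = log_to_lin(filter_3tap(lin_to_log(edge)))

print("linear:", [round(v, 3) for v in filtered_linear])
print("log:   ", [round(v, 3) for v in filtered_log])
```

Filtered in linear, the dark pixel next to the highlight goes strongly negative (a black ring once clipped); filtered in log, it only darkens slightly. The trade-off is a larger overshoot on the bright side, which in this example sits far above display white anyway.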

Now let’s look at the second point: working in linear space inside the software.

Most DCC packages work in linear space, and Nuke also works in linear space by default. Don’t confuse this with the footage linearization we have just seen. Working in linear means that if we double a value in a node, the output is doubled, which is exactly what we expect mathematically.

In Nuke, we can set this in the Project Settings.

Working in a non-linear space is technically possible (normally we don’t need to; this is just for understanding), but it can lead to errors whenever pixel interpolation is required. A simple Grade node is enough to see the difference.

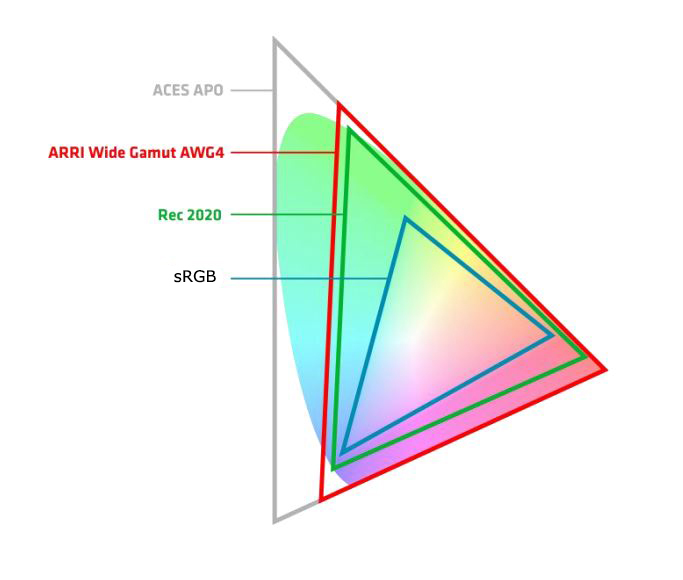

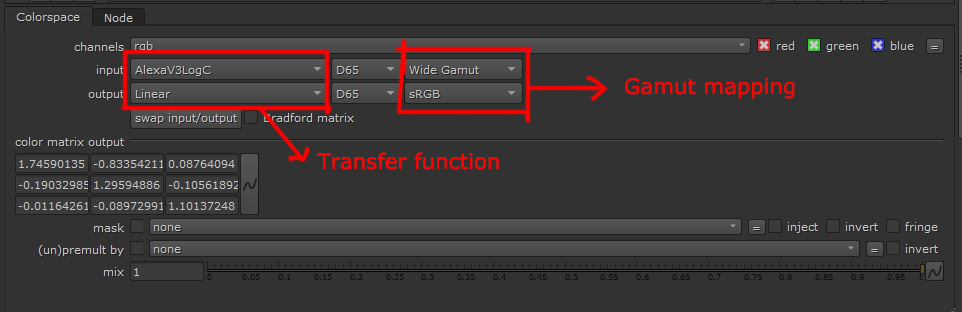
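Interpolation errors are easy to see with the simplest possible case: averaging a black and a white pixel, as a resize or blur would. This minimal sketch (function names are our own) compares averaging the sRGB-encoded values directly against averaging in linear light.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(x: float) -> float:
    """Encode linear light (0..1) with the sRGB curve."""
    x = min(max(x, 0.0), 1.0)
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1 / 2.4) - 0.055


black, white = 0.0, 1.0  # two neighbouring sRGB-encoded pixels

# Interpolating the encoded values directly (working in non-linear space):
blend_nonlinear = (black + white) / 2

# Interpolating in linear light, then re-encoding for display:
blend_linear = linear_to_srgb((srgb_to_linear(black) + srgb_to_linear(white)) / 2)

print(f"non-linear blend: {blend_nonlinear:.3f}")
print(f"linear blend:     {blend_linear:.3f}")
```

The non-linear blend stores 0.5, which only carries about 21% of the light; the physically correct half-and-half mix encodes to roughly 0.74, so edges filtered in non-linear space look too dark.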

Finally, let’s look at the third point: setting a proper gamut.

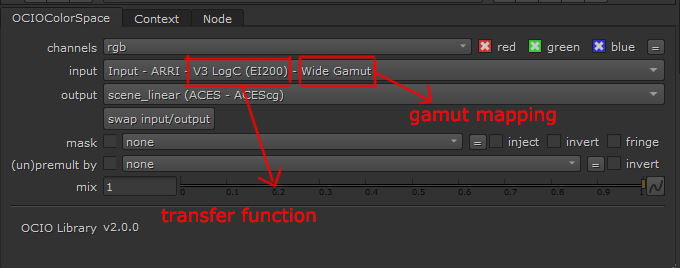

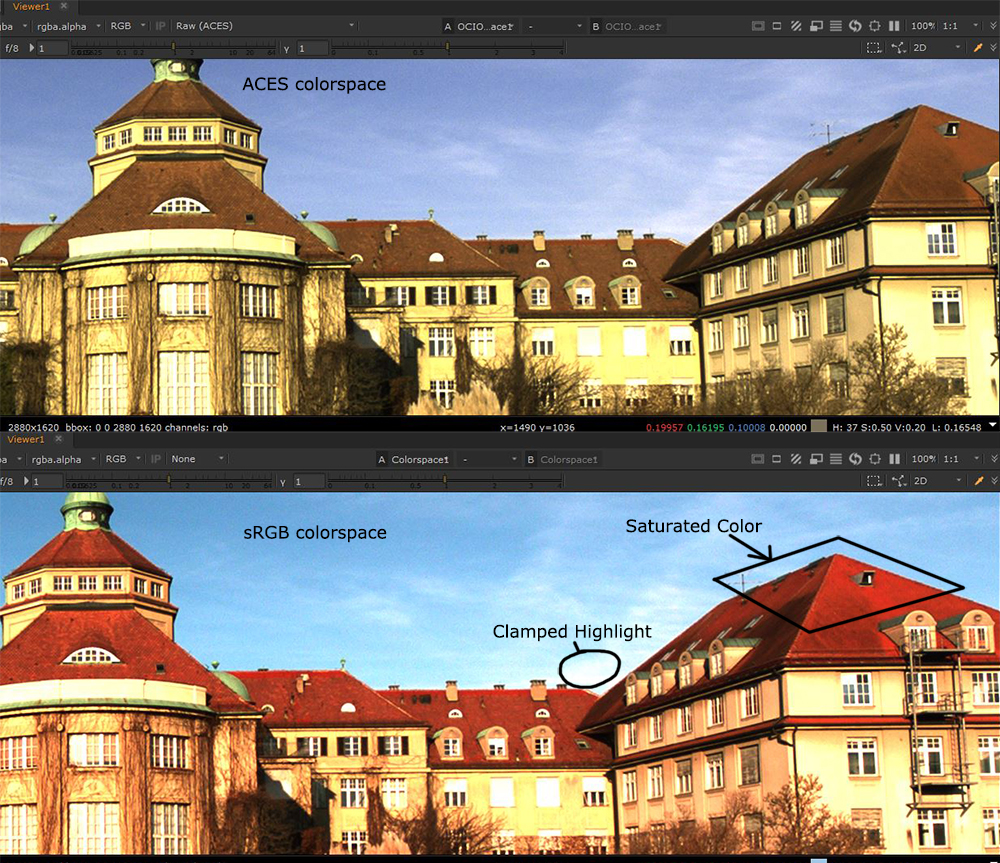

There are many professional cinema cameras, and their manufacturers each use their own transfer functions and gamuts. When we convert their footage in Nuke using the traditional workflow, the primaries are confined to the sRGB gamut; this is a limitation of Nuke’s default color management. For example, if we import Arri Alexa log footage, which has a much wider color gamut (ALEXA Wide Gamut) than sRGB, the highlights will be clipped and the colors will look more saturated because of the gamut compression.

Another source of confusion when converting color spaces manually is the difference between a transfer function conversion and a gamut conversion. Many artists overlook the second part (gamut mapping), do not convert properly, and end up working in the wrong color space.
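The gamut step is separate from the transfer function: it is a 3x3 matrix applied to values that are already linear. This minimal sketch builds that matrix from the xy chromaticities of each gamut using the standard derivation (scale the primaries so RGB 1, 1, 1 lands on the white point). The sRGB/Rec.709 primaries and D65 come from the sRGB standard; the ALEXA Wide Gamut primaries are assumed from ARRI’s published values and should be double-checked.

```python
# xy chromaticities of the red, green, and blue primaries.
SRGB_PRIMARIES = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))
AWG_PRIMARIES = ((0.6840, 0.3130), (0.2210, 0.8480), (0.0861, -0.1020))  # assumed
D65 = (0.3127, 0.3290)


def xy_to_xyz(x, y):
    """Chromaticity -> XYZ with luminance Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def invert3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def mat_vec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def mat_mul(p, q):
    return [[sum(p[r][k] * q[k][s] for k in range(3)) for s in range(3)] for r in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Scale each primary so that RGB (1, 1, 1) maps exactly to the white point."""
    cols = [xy_to_xyz(x, y) for x, y in primaries]
    m = [[cols[s][r] for s in range(3)] for r in range(3)]  # primaries as columns
    scale = mat_vec(invert3(m), xy_to_xyz(*white))
    return [[m[r][s] * scale[s] for s in range(3)] for r in range(3)]


AWG_TO_XYZ = rgb_to_xyz_matrix(AWG_PRIMARIES, D65)
XYZ_TO_SRGB = invert3(rgb_to_xyz_matrix(SRGB_PRIMARIES, D65))
AWG_TO_SRGB = mat_mul(XYZ_TO_SRGB, AWG_TO_XYZ)

# Linear (already de-logged) AWG values -> linear sRGB/Rec.709 values.
gray = mat_vec(AWG_TO_SRGB, [0.18, 0.18, 0.18])
saturated_red = mat_vec(AWG_TO_SRGB, [1.0, 0.0, 0.0])
print("gray:", [round(v, 4) for v in gray])
print("AWG red in sRGB:", [round(v, 4) for v in saturated_red])
```

Neutral gray passes through unchanged because both gamuts share D65, but a fully saturated AWG red needs negative components in sRGB: it lies outside the sRGB gamut, and clipping those values is where a gamut-limited pipeline loses color information.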

The solution to this problem is the ACES workflow (which we will discuss in the next chapter). In an ACES workflow, the application converts footage from whatever gamut it was shot in into ACES (a very large color space), and for the rest, we just need to handle gamma correction. Most ACES color spaces couple a transfer function with a gamut, so we do not need to perform the transfer function conversion and gamut mapping separately. Awesome.

sRGB vs ACES

What is sRGB?

sRGB is a confusing term for many artists.

Some say, “It is a color space.” Others reply, “It is a transfer function.” sRGB is actually both: a color space that includes a transfer function, which is a slight tweak of the simple 2.2 gamma curve. This is why a 2.2 gamma correction can be used to convert approximately between sRGB and linear.
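To see how close the “slight tweak” is, this minimal sketch compares the piecewise sRGB decoding curve (a short linear toe plus a 2.4 power segment, per IEC 61966-2-1) with a plain 2.2 power curve. The function names are our own.

```python
def srgb_eotf(v: float) -> float:
    """Piecewise sRGB decode: a short linear toe plus a 2.4 power segment."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def gamma22_eotf(v: float) -> float:
    """The simple 2.2 power curve often used as an approximation of sRGB."""
    return v ** 2.2


samples = [i / 1000 for i in range(1001)]
max_diff = max(abs(srgb_eotf(v) - gamma22_eotf(v)) for v in samples)
print(f"largest absolute difference on [0, 1]: {max_diff:.4f}")
print(f"at 0.02: sRGB {srgb_eotf(0.02):.5f} vs gamma 2.2 {gamma22_eotf(0.02):.5f}")
```

Across the range the two curves stay within about a percent of each other, which is why gamma 2.2 works as a quick approximation; near black, however, the linear toe of sRGB decodes to noticeably higher values, so the exact curve matters for deep shadows.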